OS: Virtual Memory

- OS

- 2024-12-01

- 769 Views

- 0 Comments

Author:

Haibin Lai

12211612

OS: Virtual Memory - Haibin's blog

Q1 Address Translation

Explain how do the CPU hardware and the operating system cooperate in the procedure of address translation.

Ans:

The hardware-based address translation is for the virtualization of memory. We want programs on computer can only access its own memory space and protect each program from affecting each other.

For every program, they though their address space starts from 0 to 16KB, contains all the memory reference. So the hardware and OS should make the memory space transparent to every program.

To ensure that, the CPU hardware provide 4 important feature: MMU, base and bounds regs, exception trigger, privileged mode and instruction.

Memory Management Unit (MMU) is to help transform the program virtual memory space to the real physical space. And CPU provides base and bounds register to make sure that the program's access is in its memory. If the program has a wild pointer that try to access illegal memory space like OS or other program's space, a exception will trigger. What's more, the base and bounds register should be priviledged that only OS can handle them. So, a privileged mode and privileged instruction that change the register should be provided.

As for OS, we need to implement 3 essential thing to ensure address translation: Memory management, base and bounds regs management, exception handler.

For memory management, OS should remember where the program allocate the memory. OS should maintain a free list for memory management. When creating a process, OS will find a free physical space for process, then point out that this place is occupied. Also, when the process is terminated, the place becomes free again. Also, OS should remember the base and bounds register for every process, storing them in PCB. So that even though in context switch the former base and bounds reg are flushed, they still are stored in OS's PCB. As for exception, when an exception raised by hardware, OS can handle this fault like killing the process to prevent others from malicious process.

Q2 Segmentation and Paging

Read Chapter 16 ”( ( https://pages.cs.wisc.edu/~remzi/OSTEP/vm-segmentation.pdf) and chapter 18 https://pages.cs.wisc.edu/~remzi/OSTEP/vm-paging.pdf ) of “Three Easy Pieces” and compare segmentation and paging. Your answer should cover all aspects (e.g., size of chunks, management of free space, context switch overhead, fragmentation, status bits and protection bits, etc.) and compare them side-by-side.

Ans

Segmentation

The idea of virtual memory is solved by the cooperation of OS and Hardware. However, a new problems comes out. Simply using base and bounds register will waste a lot of unused memory in program.

|-------|

| code |

| |

| heap |

| free |

| free |

| stack |

|-------|To do that, a basic idea is to give every heap, code and stack a base and bounds register, that is segement register. Segement register contains the base address, size, growth direction and protect bits. But this allocation is coarse-grained.

To support multiple segement, we need a segement table to maintain all the segements' status, so that it can handle fragmentation. And for the memory that is release, we need to coalescing the free space and new release space. So how to schedule a divide plan for fast and lease segement is essential, which we can use best fit, worst fit and first fit etc. For coalescing, we have binary buddy allocator algorithm to allocate memory to program.

Paging

Another method to control memory space is to divide space into fixed length segement ---- paging.

Paging has high flexibility. We can provide abstract whatever the process use address space and we don't need to consider the growth of stack and heap. To maintain pages, we need a page table to store all the address translation.

Here, a virtual address contains virtual page number VPN and offset. The offset is not changed, and VPN will transform into PFN.

offset = VirtualAddress & OFFSET_MASK

PhysAddr = (PFN << SHIFT) | offset

// Extract the VPN from the virtual address

VPN = (VirtualAddress & VPN_MASK) >> SHIFT

// Form the address of the page-table entry (PTE)

PTEAddr = PTBR + (VPN * sizeof(PTE))

// Fetch the PTE

PTE = AccessMemory(PTEAddr)

// Check if process can access the page

if (PTE.Valid == False)

RaiseException(SEGMENTATION_FAULT)

else if (CanAccess(PTE.ProtectBits) == False)

RaiseException(PROTECTION_FAULT)

else

// Access is OK: form physical address and fetch it

offset = VirtualAddress & OFFSET_MASK

PhysAddr = (PTE.PFN << PFN_SHIFT) | offset

Register = AccessMemory(PhysAddr)Summary Table

| Aspect | Segmentation | Paging |

|---|---|---|

| Chunk Size | Variable size (logical segments) | Fixed size (e.g., 4 KB pages) |

| Free Space Management | More complex, prone to external fragmentation. Use algorithm like binary buddy allocator to accerlate and manage it | Simpler, no external fragmentation, but internal fragmentation can occur in pages |

| Context Switch Overhead | Higher, due to segment table management. OS must must load the segment table for the incoming process. | Lower, mainly involves page table updates. Requires loading the page table of the incoming process. |

| Fragmentation | External fragmentation is main concern | Internal fragmentation may occur |

| Utilization | Fine-grained (per segment) | Coarse-grained (per page) |

| Address Translation Mechanism | Segment number + offset, uses segment table | Page number + offset, uses page table |

| Implementation | More complex, requires managing variable-sized chunks | Simpler, fixed-size pages reduce complexity |

| Flexibility | More flexible in terms of memory use | Less flexible due to fixed page size |

| Protection bits and Status bits | present/absent, read, write, execute permissions | present, modified; enabling control over access permissions at the page level |

Q3 Virtual Page address

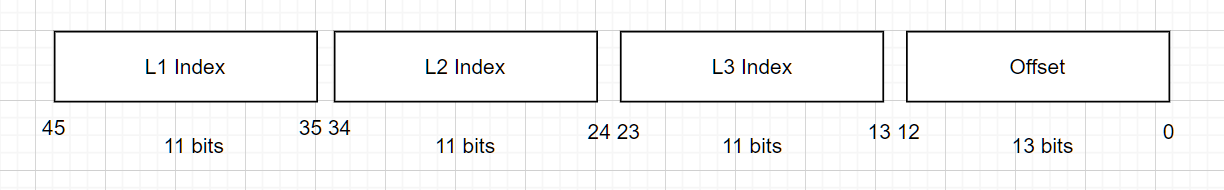

Consider a system with the following specifications:

46-bit virtual address space

Page size of 8 KBytes

Page table entry size of 4 Bytes

Every page table is required to fit into a single page

How many levels of page tables would be required to map the entire virtual address space?

Please document the format of a virtual address under this translation scheme. Briefly explain your rationale.

Answer

Virtual address space is 46 bit with $2^{13}$ Bytes Page size, so

$$

Number \ of \ Page = 2^{46} / 2^{13} = 2 ^{33}

$$

Page table entry size is 4 Bytes, so

$$

\ Entries \ number \ per \ page \ table = (Page \ size) / (PTE \ size) = 2^{13} / 4

= 2^{11} $$

For the level 1 page table it can match $2^{11}$ entries. And each sublevel also need maps $2^{11}$ entries.

$$

Number \ of \ Level = 2^{33} / 2^{11} = 3

$$

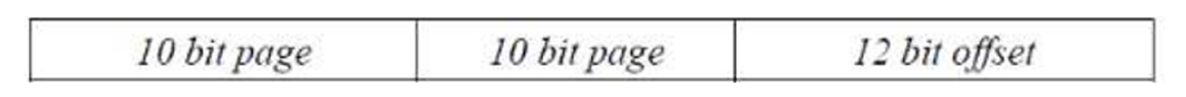

So, the format of a virtual address is:

Q4 Virtual Address Space

Consider a system with following specifications:

Both virtual address space and physical address are 32bits.

Page table entry size of 4Bytes

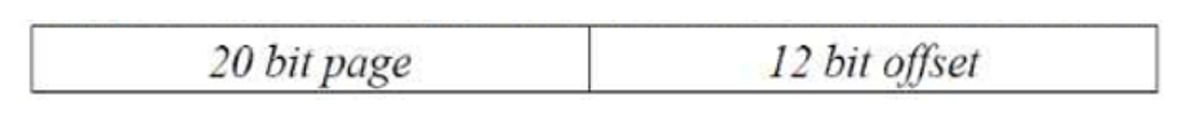

(a) Suppose it uses 1-level page table, the format of the translation scheme is

What is the page size? What is the maximum page table size?

$$

Page \ size = 2^{12} \ bytes = 4096 \ bytes

$$

Maximum page table size:

$$

Maximum \ page \ table \ size = 2^{20} \times 4 \ bytes = 4 MB

$$

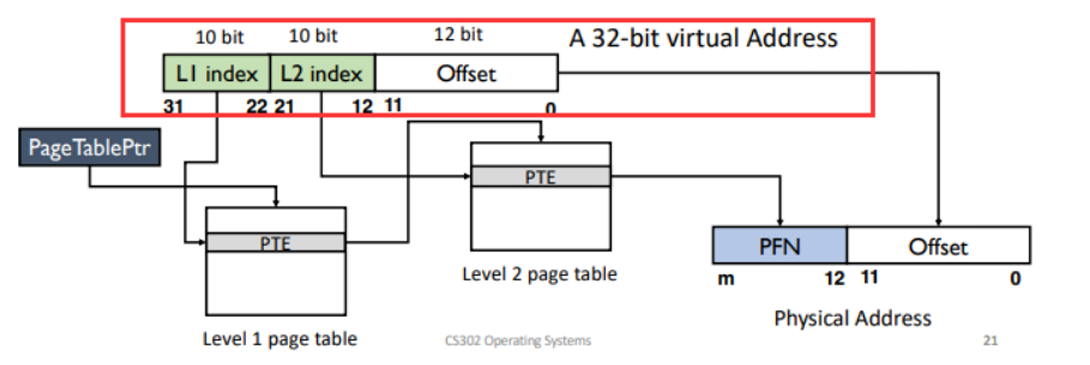

(b) Suppose it uses 2-level page table, the format of the translation scheme is

Please write down the 1-st level page number and its offset in decimal (base 10) of virtual address 0xC302C302 (base 16).

Please write down the 2-nd level page number and its offset in decimal (base 10) of virtual address 0xEC6666AB (base16)

0xC302C302 = b'1100 0011 0000 0010 1100 0011 0000 0010

So 1-st level page numver:

Page index: b'1100 0011 00 = 780 (decimal)

Page offset: b'0011 0000 0010 (binary) = 770 (decimal)

0xEC6666AB = b'1110 1100 0110 0110 0110 0110 1010 1011

Page index: b'10 0110 0110 (binary)

Page offset: b'0110 1010 1011 (binary) = 1707

Q5 Buddy System Allocator

Please use the buddy_system_allocator crate to do allocation and deallocation, here are the recommended initialization, and corresponding alloc and dealloc functions:

let mut allocator: buddy_system_allocator::FrameAllocator<32> = buddy_system_allocator::FrameAllocator::new();

allocator.add_frame(0, 64);

let base = allocator.alloc(4).unwrap();

allocator.dealloc(base, 4);Steps:

- allocate 4 frames

- allocate 4 frames

- deallocate the 4 frames in step 2

- allocate 8 frames

- allocate 2 frames

Output the starting and ending addresses of the frames allocated in steps 1, 2, 4, and 5, and take a screenshot. Please provide detailed explanations for each allocation. Output template

[Step 1 allocation] start: {}, end: {}.

[Step 2 allocation] start: {}, end: {}.

[Step 4 allocation] start: {}, end: {}.

[Step 5 allocation] start: {}, end: {}.Ans

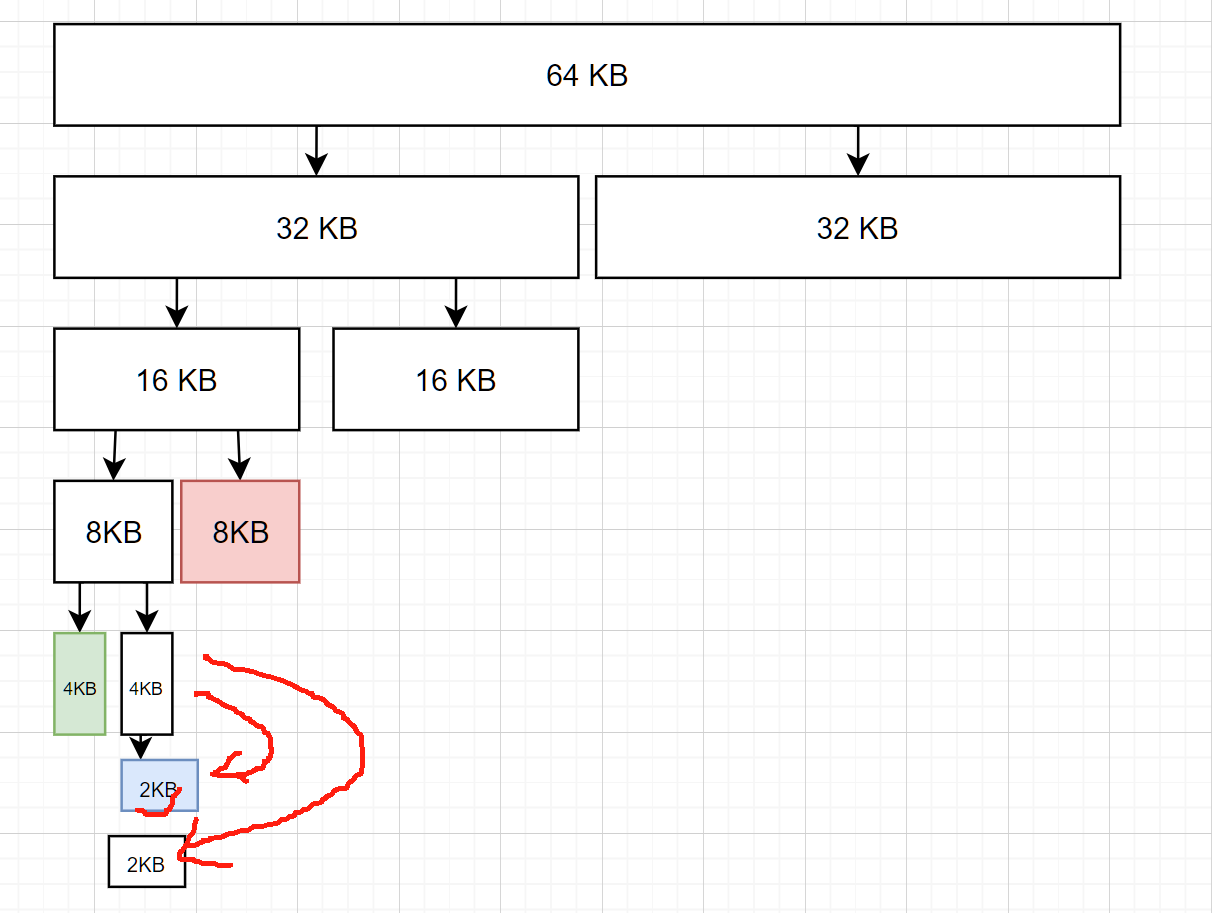

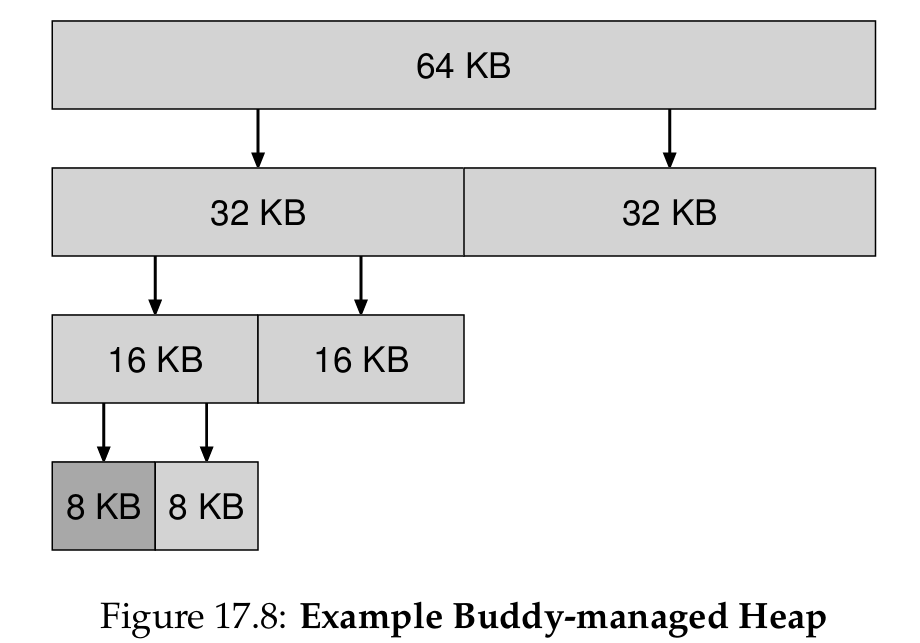

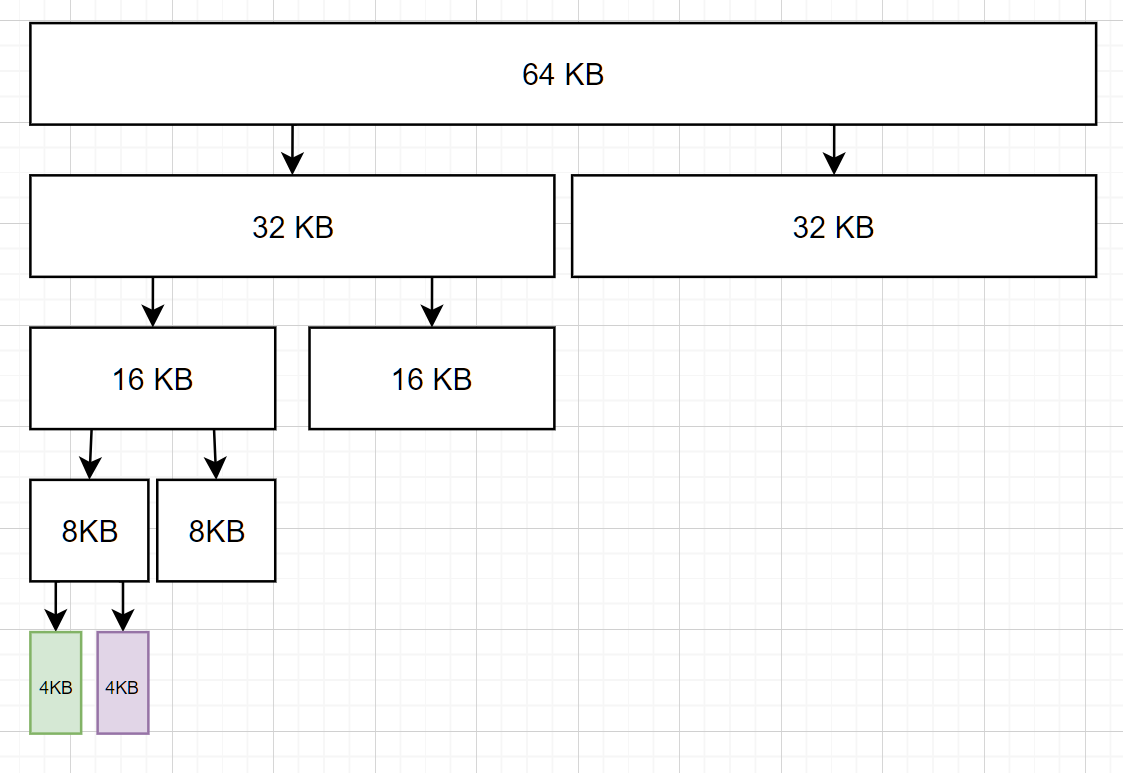

Ref: OSTEP 17single.dvi

In such a system, free memory is first conceptually thought of as one

big space of size $2^N$. When a request for memory is made, the search for

free space recursively divides free space by two until a block that is big

enough to accommodate the request is found (and a further split into two

would result in a space that is toosmall). At this point, the requested

block is returned to the user.

[Step 1 allocation] start: 0, end: 4.

[Step 2 allocation] start: 4, end: 8.

[Step 4 allocation] start: 8, end: 16.

[Step 5 allocation] start: 4, end: 6.

allocation 1:

[Step 1 allocation] start: 0, end: 4.

Allocation 2:

[Step 2 allocation] start: 4, end: 8.

Allocation 3:

Step 4 allocate 8 frames

[Step 4 allocation] start: 8, end: 16.

Step 5 allocate 2 frames

[Step 5 allocation] start: 4, end: 6.